Anthropic and Pentagon split on AI targeting and U.S. surveillance

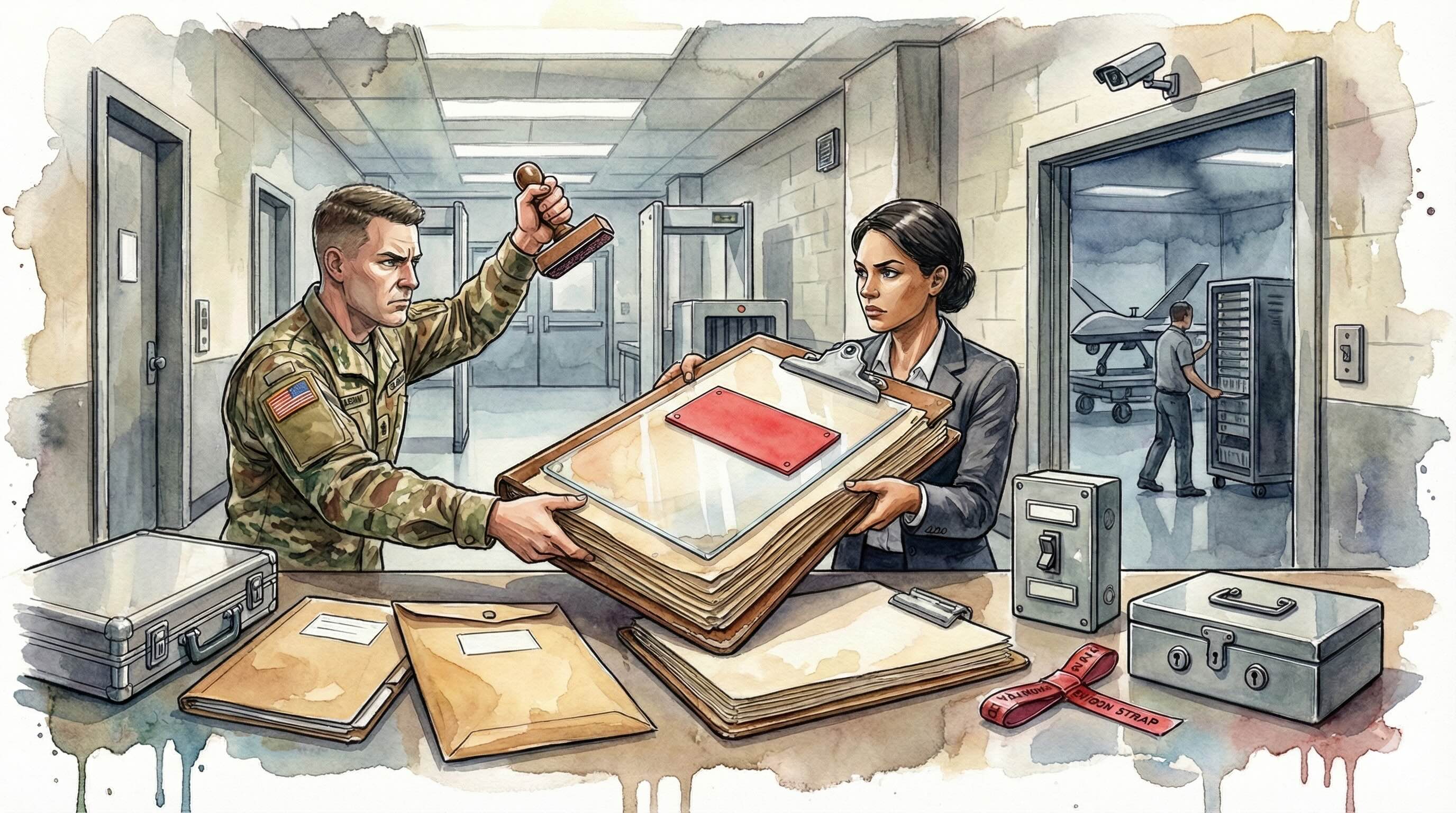

After weeks of talks, the Pentagon and Anthropic remain at odds over easing AI limits that could permit autonomous targeting and U.S. domestic surveillance, Reuters reports.

Talks between the Pentagon and AI developer Anthropic have stalled over whether to relax safeguards on the company’s systems, a change that people involved say could open the door to autonomous weapons targeting and U.S. domestic surveillance, Reuters reports. After several weeks of negotiations, the parties remain apart on contract terms governing sensitive uses.

Individuals briefed on the talks described a dispute over whether government users could override Anthropic’s usage policies when an operation is lawful under U.S. statutes. Defense officials have pressed for flexibility to deploy commercial AI across missions despite vendor prohibitions on targeting and surveillance applications.

Officials have cited a Jan. 9 Defense Department memo on AI strategy to support the view that the department can field commercial tools as long as activities comply with U.S. law. Anthropic has resisted language that would waive or weaken its safeguards, arguing the controls are integral to how its systems are built and managed, according to these people.

Anthropic is one of several AI providers under evaluation for national security work. The impasse has slowed progress on a potential agreement following multiple negotiating sessions this month.

Several people familiar with the process said the company’s policies have fueled disagreements with the Trump administration, which is pushing faster adoption of commercial AI across defense and intelligence programs. The parties have not reached common ground on acceptable uses.

The Pentagon did not provide a comment in response to inquiries. In a statement, Anthropic wrote that its AI is “extensively used for national security missions by the U.S. government and we are in productive discussions with the Department of War about ways to continue that work.”

Discussions have examined operational details, including whether federal users could disable safety features in limited cases, how auditing and oversight would function if company restrictions stay in place, and who would authorize time-sensitive targeting or surveillance tasks. Both sides have explored technical and policy options but have not agreed on binding terms.

Anthropic’s view, according to people familiar with the talks, is that weakening safeguards for any one customer could create broader risks and complicate compliance across its user base. The company has maintained that consistent enforcement of its policies is necessary to prevent misuse.

While discussions are ongoing, the stalemate has delayed a deal, and it is unclear whether the Pentagon will shift to other vendors or return to the table with revised terms.

As we covered previously, Anthropic, maker of the Claude models, is discussing a roughly $10 billion funding round that could lift its valuation to about $350 billion, with GIC and Coatue as potential lead investors. On those terms, the company’s valuation would nearly double from its September 2025 level.
